Free Software programmer

rusty@rustcorp.com.au


This blog existed before my current employment, and obviously reflects my own opinions and not theirs.

This work is licensed under a Creative Commons Attribution 2.1 Australia License.


Tue, 27 Oct 2009

Finally, Rusty's Blog Moves to WordPress

Sat, 24 Oct 2009

SAMBA Coding and a Little Kernel

So two weeks back was the Official Handing Over Of The SAMBA Team T-shirt! Since then I have done my first serious push to the git tree, and received spam from the build farm about it (false positives, AFAICT).

I'm still maintaining virtio and the module and parameter code, of course. But the kernel has slowly morphed into a complicated and hairy place. Formality has crept in, and the pile of prerequisites grows higher (eg. git, checkpatch.pl, Signed-off-by). This is maturity, but it raises the question: when will some neat, lean OS without all this baggage come along?

SMP, micro-optimizations, multithreading and extreme portability are responsible for much of the added coding burdens, but also hyper-distributed development means many coders shy away from changes which would break APIs. The suboptimality accretes and this method of working becomes the new norm. BUG_ON() for API misuse is now seen as unduly harsh, but undefined APIs make the next change harder, and WARN_ON() tends to stay around forever.

SAMBA has some brilliant ideas which make coding a joy (talloc chief among them, but there are other gems to be found). Hell, it even has a testsuite! But of course it has its own issues: the SAMBA 3/4 split, lack of the kernel's massive human resources and the inevitable code quality issues. Ask me again in a few years to do a comparison...

[/tech] permanent link

Tue, 20 Oct 2009

ext3, corruption, and barrier=1

I mentioned in my previous post that we had seen tdb corruption (despite the carefully written syncing transaction code) when power failures occurred.

I mentioned (from my previous experience with trying to test virtio_blk) that ext3 doesn't use barriers by default, and that the filesystems should be mounted with "barrier=1". (The IBM engineers on the call were horrified that this wasn't the default: I remember the exact same feeling when I found out!).

I had my tdb_check() routine now, so I patched it into tdbtool and modified tdbtorture to take a -t ("do everything inside transactions") option: killing the box should still allow tdb_check() to pass when it came back. I thought about using virtualization, but this isn't easy: killing kvm still causes outstanding writes to be completed by the host kernel (nested virtualization would work). So instead, it was time to use my physical test box.

First with standard ext3. Three times I started tdbtorture -t, then pulled the cord out the back. The first two times, sure enough, the tdb was corrupt. The third time, the root filesystem mounted read-only, so I fscked, rebooted, got the same thing, fscked again, and rebooted happy. Sure enough, the tdb was corrupt (and one of my previously saved corrupted tdbs was lost, another was in lost+found). I should have forced a fsck on every reboot.

So I edited /etc/fstab to put barrier=1 in, and pulled the plug during tdbtorture again. Surprisingly, I got a journal error and r/o remount again, which shouldn't happen. Still, when I did another double-fsck, the tdb was clean! Two more times (no more fs corruption), and two more clean tdbs.

So it seems lack of barriers was the culprit. But also note that tdbtorture took 4.8 seconds without barriers, and 20 or 28 seconds with them (and this slowdown itself might make errors less likely). This is worse than the 10% that googling suggested, but then tdbtorture is pretty perverse: three processes all doing three fsyncs per commit, and a commit happening about every 10 db operations.

[/tech] permanent link

Mon, 12 Oct 2009

Fun With Bloom Filters

A few years back at a netconf, someone (Robert Olsson maybe? Jamal Salim?) got excited about Bloom Filters. It was my first exposure.

The idea is simple: imagine a zeroed bit array. To put a value in the filter you hash it to some bit, and set that bit. Later on, to check if something is in the filter, you hash it and check that bit. Of course this is a pretty poor filter: it never gives false negatives, but has about a {num entries} in {num bits} chance of giving false positives. The trick is to use more than one hash: the chance of all those bits being set drops rapidly.
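Here's a minimal sketch of that idea in C, purely for illustration. The 256-bit size, the 4 hashes, the use of FNV-1a and the "double hashing" trick (deriving hash i as h1 + i*h2 from two base hashes) are all my assumptions, and every name here is hypothetical:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical parameters: 256 bits of bitmap, 4 hashes per value. */
#define BLOOM_BITS   256
#define BLOOM_HASHES 4

struct bloom {
	uint8_t bits[BLOOM_BITS / 8];	/* must start out zeroed */
};

/* 32-bit FNV-1a over the key bytes, from a caller-chosen starting value. */
static uint32_t fnv1a(const void *key, size_t len, uint32_t h)
{
	const unsigned char *p = key;
	while (len--) {
		h ^= *p++;
		h *= 16777619u;
	}
	return h;
}

/* Derive the k bit positions as h1 + i*h2 (mod BLOOM_BITS).  h2 is forced
 * odd, so the positions for one key are all distinct modulo 256. */
static void bloom_positions(const void *key, size_t len,
			    unsigned pos[BLOOM_HASHES])
{
	uint32_t h1 = fnv1a(key, len, 2166136261u);
	uint32_t h2 = fnv1a(key, len, 0x9747b28cu) | 1;
	for (unsigned i = 0; i < BLOOM_HASHES; i++)
		pos[i] = (h1 + i * h2) % BLOOM_BITS;
}

static void bloom_add(struct bloom *b, const void *key, size_t len)
{
	unsigned pos[BLOOM_HASHES];
	bloom_positions(key, len, pos);
	for (unsigned i = 0; i < BLOOM_HASHES; i++)
		b->bits[pos[i] / 8] |= (uint8_t)(1u << (pos[i] % 8));
}

/* false: definitely never added.  true: probably added (false positives
 * are possible, false negatives are not). */
static bool bloom_maybe_has(const struct bloom *b, const void *key, size_t len)
{
	unsigned pos[BLOOM_HASHES];
	bloom_positions(key, len, pos);
	for (unsigned i = 0; i < BLOOM_HASHES; i++)
		if (!(b->bits[pos[i] / 8] & (1u << (pos[i] % 8))))
			return false;
	return true;
}
```

A lookup only says "no" when at least one of the value's bits is clear, which is why anything you added always comes back "maybe".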

It can be used to accelerate lookups, but we never found a good use for it. Still, it sat in the back of my head for a few years until I came across a completely different use for the same idea.

TDB (the Trivial DataBase) is a simple key/value pair database in a file (think Berkeley DB). It has a free list head and a set of hash chain heads at the start, and each record is singly linked (via a "next" entry) onto one of these lists. My problem is that even though TDB supports transactions, there were reports of corruption on power failure (see next post!); we wanted a fast consistency check of the database. In particular, this was for ctdb: if the db is corrupt you just delete it and get a complete copy from the other nodes.

A single linear scan would be fastest, rather than seeking around the file. Checking each record is easy, but how do we check that it's in the right hash chain (or the free list), and that each record only appears once? A record that appears twice means the chain loops back on itself, and the particular corrupt tdb I was given contained exactly such an infinite loop, which is a nasty failure mode. The obvious thing to do is to seek through and record all the next pointers, and the actual record offsets, then sort the next pointers and see that the two lists match. But that involves a sort and would take 8 bytes per record (TDB is 32 bit, so that's 4 bytes for the next pointer and 4 bytes to remember the actual record offset).

How would we do this in fixed space, even though we don't know how many records there are? What if, instead, we allocate two Bloom filters for each hash chain (and one for the free list)? We put next pointers in the first Bloom filter, and actual located records in the second. At the end, the two should match!

But we can do better than this. Say we use 8 hashes, and 256 bits of bitmap. First off, if the 8 hashes of a value overlap already-set bits, it has no effect and we won't be able to tell if it's missing from the other filter. And if seven bits overlap others (so it only sets one unique bit) then we can't detect a "bad" value which sets that same bit and no other unique bits.

So instead of setting bits, we can flip bits in the bitmap. This means that we can detect a single extra value in one list unless it happens to cancel out its own bits (ie. the hash values all happen to form pairs), and if two values are different they'd need to hit precisely the same bits. This is astronomically unlikely (it's a bit more than 1 in 256! / (8! * 248!), which is roughly 1 in 4 × 10^14, but it's still a very small number).

The best bit, of course, is that you don't need two bitmaps: a single one will do. Since the two sets of values should be equal, it should be all zero bits when finished!
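To make the single-bitmap trick concrete, here's a sketch in C using the 256 bits and 8 hashes from the example above. It is not the CCAN code: the integer mixing function, treating each entry as a plain 32-bit offset, and all the names are illustrative assumptions. Every "next" pointer flips its bits once, every record actually found flips them again, so a consistent chain leaves the bitmap all zero:

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define CHECK_BITS   256	/* bits in the bitmap, as in the text */
#define CHECK_HASHES 8		/* hashes per value, as in the text */

struct xor_check {
	uint64_t words[CHECK_BITS / 64];	/* must start out zeroed */
};

/* Hypothetical hash: a 32-bit integer mixer, varied by a per-hash seed. */
static uint32_t mix32(uint32_t x, uint32_t seed)
{
	x ^= seed * 0x9e3779b9u;
	x ^= x >> 16;
	x *= 0x7feb352du;
	x ^= x >> 15;
	x *= 0x846ca68bu;
	x ^= x >> 16;
	return x;
}

/* Flip (rather than set) each of the value's bits.  Flipping the same
 * value twice restores the bitmap exactly. */
static void xor_check_flip(struct xor_check *c, uint32_t value)
{
	for (uint32_t i = 0; i < CHECK_HASHES; i++) {
		unsigned bit = mix32(value, i + 1) % CHECK_BITS;
		c->words[bit / 64] ^= (uint64_t)1 << (bit % 64);
	}
}

/* After flipping in both sets: all zero means they (almost certainly)
 * matched; any set bit means some value appeared an odd number of times. */
static bool xor_check_balanced(const struct xor_check *c)
{
	for (unsigned i = 0; i < CHECK_BITS / 64; i++)
		if (c->words[i])
			return false;
	return true;
}
```

Since XOR is order-independent, the next pointers and record offsets can be fed in whatever order the linear scan meets them.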

In practice, all the corrupt TDBs I've gathered have had much more gross errors. But it's nice to finally use Bloom's ideas! The code can be found in the CCAN repository.

[/tech] permanent link

Wed, 30 Sep 2009

Late Night Hacking

It's been a while, but I find myself hacking to 3am tonight (virtio_blk needed some love, and it was easier to patch it myself than explain The Right Thing to the patch submitter).

Am seriously tempted to do that tdb hacking now, but I get Arabella tomorrow and I definitely want to face her fully refreshed!

[/tech] permanent link

Mon, 28 Sep 2009

Lguest?! Really?

So, the New York Times covered Sandia National Labs' use of a million virtual machines to research botnets. I saw something fly by on slashdot, but didn't pay any attention.

Then a couple of IBM research guys sent me an embarrassed mail a few days ago. Senior IBM execs had seen the Sandia press release crediting lguest which "was developed by the research arm of IBM" and were wondering why they'd never heard of it. :)[1]

Upshot is: I always said lguest was a hypervisor research and education tool, but this blew me away! Ron Minnich has been submitting stuff for lguest for a while now, but I assumed he was just idling. Great stuff!

[1] The Linux Technology Center is not part of IBM Research, and lguest was just a random hack I did to help some other things I was working on (and probably spent too much time on to justify).

[/tech] permanent link

Mon, 20 Jul 2009

linux.conf.au 2010 Submissions

Finally submitted to LCA 2010. Yes, I'm submitting the lguest tutorial for the third (and last) time; having put all the effort into it, I feel it will finally be a good tutorial.

But I wanted to talk about something else, so I made a more off-the-wall submission, on the stuff I've been doing with Arabella, the Nintendo Wiimote, and libcwiid. The hope is that if it gets accepted, it'll give me motivation to spend more time perfecting it!

In unrelated news, I got an email from Peter Richards who has been playing with my old pong code, and made improved IR pens. He had some Vishay IR LEDs left over, and has mailed them to me. If my paper is accepted, I'll have to figure out what to do with them!

[/tech] permanent link

Wed, 06 May 2009

libcwiid support for Guitar Hero World Tour Drums

I use libcwiid for my various hacks, and recently I wanted to connect to the GH4 drumkit (which has been documented thoroughly on wiibrew.org), but I couldn't find any patches. After realizing that the libcwiid project is pretty much abandonware, I imported the SVN into Mercurial and hacked in drum support.

The start was the patch for GH guitar identification (found here), but it didn't properly implement the new detection scheme. So I cleaned that up first.

The code in general needs some love, and adding support for new devices breaks the ABI and API as it stands, yet that's fairly easy to fix. But I don't really want to adopt YA puppy right now...

So this patch should get you going on the drums! Send me mail if you want support for other devices (the GHWT guitar should be easy), or other patches. If there's enough interest I'll export the repository somewhere.

[/tech] permanent link

Tue, 14 Apr 2009

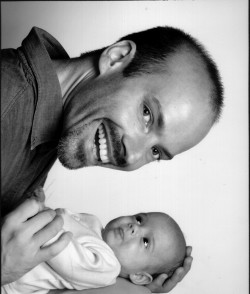

Gratuitous Arabella Pics

People do ask me how my daughter Arabella is going; she seems to be thriving on 4-5 days every fortnight with me. We go to the Central Markets to shop. We go swimming. We lie on the grass in the parklands over the road and read The Hobbit. We nap (a lot).

She's a mostly-happy wonderful baby, and even my favorite photographs don't do her justice:

- Arabella December 2008 (Professional photographer)

- Arabella March 2009 (My sister)

- Arabella April 2009 (Avi Miller)

[/self] permanent link

Tue, 07 Apr 2009

IBM LTC Tuz

In response to the LCA 2009 Dinner Auction, which raised about A$40k to help save the Tasmanian Devil, Linus agreed to change the logo for the 2.6.29 release (making that patch was fun: who knew there was a PNM reader in the kernel source?). No surprise that Tuz has also been seen moonlighting around the IBM Linux Technology Center.

[/tech] permanent link

Fri, 13 Mar 2009

Valid Uses of Macros

So, C has a preprocessor, and it can be used for evil: particularly function-style macros (#define func(arg)) are generally considered suspect. Old-timers used to insist all macros were SHOUTED, but it can make innocent (but macro-heavy) code damn ugly.

Remember, it's only a problem when it's Easy to Misuse, and if you've written something that's easy to misuse, maybe a rethink is better than an ALL CAPS warning.

There is one genuine and inescapable use for macros:

- Macros which deal with types, or take any type

- The classic here is

#define new(type) ((type *)malloc(sizeof(type)))

But consider also the Linux kernel's min() implementation:

#define min(x,y) ({ \
	typeof(x) _x = (x); \
	typeof(y) _y = (y); \
	(void) (&_x == &_y); \
	_x < _y ? _x : _y; })

which uses two GCC extensions to produce a warning if x and y are not exactly the same type.
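A small self-contained demonstration of both macros, assuming GCC or Clang: the min() here is a local re-creation that spells typeof as __typeof__ and relies on a statement expression, both compiler extensions. struct point and point_min() are hypothetical, just something to call the macros from:

```c
#include <assert.h>
#include <stdlib.h>

/* Type-generic allocation: the cast ties the result to the named type. */
#define new(type) ((type *)malloc(sizeof(type)))

/* Kernel-style min(): each argument is evaluated exactly once, and the
 * pointer comparison makes the compiler warn if x and y differ in type. */
#define min(x, y) ({				\
	__typeof__(x) _x = (x);			\
	__typeof__(y) _y = (y);			\
	(void) (&_x == &_y);			\
	_x < _y ? _x : _y; })

struct point {
	int x, y;
};

/* Hypothetical helper: allocate a point holding the smaller coordinates. */
static struct point *point_min(const struct point *a, const struct point *b)
{
	struct point *p = new(struct point);
	if (p) {
		p->x = min(a->x, b->x);
		p->y = min(a->y, b->y);
	}
	return p;
}
```

Passing an int and a long to this min() should draw a "comparison of distinct pointer types" warning, which is the whole point of the (void) line.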

And there are several justifiable but more arguable cases:

- Const-correct wrappers

- If you need to wrap a struct member access, it's annoying to do it as an inline function. To be general, a function needs to take a const pointer argument, then cast away the const (see strchr). Const exists for a reason, and stealing it from your callers is a bad idea. A macro

#define tsk_foo(tsk) ((tsk)->foo)

maintains const correctness, at the slight cost of type safety (you could hand anything with a foo member there, though it's unlikely to cause problems and can be fixed with a more complex macro).

- Debugging macros

- Generally just add __FILE__ and __LINE__ to a function call. The non-debug versions are generally real functions.

- Genuinely fancy tricks

- There's no good way around a macro for things like ARRAY_SIZE and BUILD_BUG_ON (these versions are taken from CCAN, which calls the latter BUILD_ASSERT):

#define ARRAY_SIZE(arr) (sizeof(arr) / sizeof((arr)[0]) + _array_size_chk(arr))
#define BUILD_ASSERT(cond) do { (void) sizeof(char [1 - 2*!(cond)]); } while(0)
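Here's a sketch of the const-correct wrapper argument and the compile-time tricks side by side. struct task, tsk_foo_fn() and sum_foos() are hypothetical; BUILD_ASSERT is the macro quoted above, while this ARRAY_SIZE is deliberately simplified and drops CCAN's _array_size_chk() (which uses further GCC extensions to reject pointers):

```c
#include <assert.h>
#include <stddef.h>

struct task {
	int foo;
};

/* Function version: to accept const callers it must take a const pointer
 * and cast the const away, handing every caller a writable int *. */
static int *tsk_foo_fn(const struct task *tsk)
{
	return (int *)&tsk->foo;
}

/* Macro version: the result keeps the caller's constness, so given a
 * const struct task *, &tsk_foo(t) is a const int *. */
#define tsk_foo(tsk) ((tsk)->foo)

/* Simplified: unlike CCAN's version, this silently accepts a pointer. */
#define ARRAY_SIZE(arr) (sizeof(arr) / sizeof((arr)[0]))

/* A false cond gives a negative array size, so compilation fails. */
#define BUILD_ASSERT(cond) \
	do { (void) sizeof(char [1 - 2*!(cond)]); } while(0)

static int sum_foos(const struct task *tasks, size_t n)
{
	int total = 0;
	for (size_t i = 0; i < n; i++)
		total += tsk_foo(&tasks[i]);	/* read-only use is fine */
	return total;
}
```

Writing `*&tsk_foo(ct) = 7` through a const pointer won't compile, whereas the function version lets it through silently.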

More questionable still:

- Macros which declare things

- This is for things which need initialization, eg. LIST_HEAD in the kernel (or ccan/list) expects an "empty" list to be pointing to itself. While this is convenient, nothing else in C self-initializes so it's arguably better to provide an "EMPTY_LIST(name)" macro. You get a nice crash if you forget (except on stack vars).

- Macros which iterate

- list_for_each() (ccan/list.h version of the kernel's list_for_each_entry):

#define list_for_each(h, i, member) \
	for (i = container_of_var(debug_list(h)->n.next, i, member); \
	     &i->member != &(h)->n; \
	     i = container_of_var(i->member.next, i, member))

It's less explicit, but much shorter than having three macros and using them to loop:

for (i = list_start(&list, member); i != list_end(&list, member); i = list_next(&list, member, i))

If we sacrifice a little efficiency for convenience, we can make list_start() and list_next() evaluate to NULL at the end of the list, and I prefer that to the list_for_each() macro:

for (i = list_start(&list, member); i; i = list_next(&list, member, i))

- Modify your arguments.

- C coders don't expect magic changes to parameters. From kernel.h:

#define swap(a, b) \
	do { typeof(a) __tmp = (a); (a) = (b); (b) = __tmp; } while (0)

- Embed control statements that jump to places outside the macro.

- Putting 'return' in macros is only ok if the macro is called, say, COMPLAIN_AND_RETURN. And then it's probably still a bad idea.

- The classics: use too few brackets, or allow multi-evaluation.

- The former is unforgivable; it cost me half a day of my life once when I was younger and using another coder's RAD2DEG() macro. The latter can be avoided with gcc extensions (see min() above), or sometimes using sizeof().
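The NULL-terminated loop style preferred above can be sketched self-contained. This is not the ccan/list API: it assumes a minimal circular list whose empty head points to itself, and the list_start()/list_next() here are hypothetical variants that take the element type explicitly (the real ones infer it). Each macro argument is evaluated only once, in keeping with the rules above:

```c
#include <assert.h>
#include <stddef.h>

/* Minimal circular doubly-linked list; an empty head points to itself. */
struct list_node {
	struct list_node *next, *prev;
};

struct list_head {
	struct list_node n;
};

static void list_head_init(struct list_head *h)
{
	h->n.next = h->n.prev = &h->n;
}

static void list_add_tail(struct list_head *h, struct list_node *node)
{
	node->prev = h->n.prev;
	node->next = &h->n;
	h->n.prev->next = node;
	h->n.prev = node;
}

/* Map a node back to its containing element (what container_of() does),
 * or return NULL once we've wrapped around to the head. */
static void *list_entry_or_null(const struct list_head *h,
				const struct list_node *node, size_t offset)
{
	if (node == &h->n)
		return NULL;
	return (char *)node - offset;
}

static void *list_first(const struct list_head *h, size_t offset)
{
	return list_entry_or_null(h, h->n.next, offset);
}

/* Hypothetical NULL-terminated iterators. */
#define list_start(h, type, member) \
	((type *)list_first((h), offsetof(type, member)))
#define list_next(h, i, type, member) \
	((type *)list_entry_or_null((h), (i)->member.next, offsetof(type, member)))

struct item {
	int value;
	struct list_node link;
};

static int list_sum(const struct list_head *h)
{
	int total = 0;
	for (const struct item *i = list_start(h, struct item, link); i;
	     i = list_next(h, i, struct item, link))
		total += i->value;
	return total;
}
```

The cost is an extra head comparison buried in each step, which is the "little efficiency" traded for a loop any C programmer can read.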

[/tech] permanent link

Mon, 26 Jan 2009

linux.conf.au 2009

This is a braindump so I remember, not any kind of ordered report.

Newcomer's session worked well: it's not about the content so much as making people feel welcome and less lost. (It's also about them meeting each other, which is my excuse for a deliberately lacklustre presentation). I said "newbie" twice though, and I hate that word. And I am just not sure what to say to someone who says last year's was better.

Miniconfs are supposed to be more chaotic than the main conf, so that's my excuse for a lacklustre presentation at the Kernel miniconf. It was basically a bullet list of what's been happening with cpumask et al. Linus was off scuba diving somewhere, but no one shouted me down as an idiot, so count it as a win. Paul McKenney's talk was good as always, but too long for the slot (he had to skip slides just as he was getting to the stuff I hadn't heard before).

Attended the Geek Parenting session at the LinuxChix miniconf; my take-home point was about finding ways of encouraging strengths (kids hitting each other with sticks? How about fencing lessons?) but not giving up on activities where kids need to get over a hump (example was violin IIRC). (Other take-home point: I should ask Karen and Bdale for advice, since Edale turned out so well...)

Monday evening spent worrying at my Free as In Freedom talk. One reason I was really hoping that Kim Weatherall would make it to Hobart was that it needed some tightening. However, when unhappy with the refinement of the content, you can always make up for it by doing something flashy and stupid.

Tuesday I was less coherent in my choices of what I attended (Monday was mainly kernel miniconf). My Free As In Freedom talk was lukewarm, but I ended with the definitely unrepeatable "Software Patents as Interpretive Dance". And I doubt the camera captured it.

Wednesday's keynote by Tom Limoncelli was good, but mis-aimed for most of the audience, who are not sysadmins. He would probably have re-calibrated it if it had been later in the week and he'd had more exposure to us. Jeremy Kerr's spufs talk was solid, and he rightly spent more time on the userspace SPU programming interface than on the filesystem as a filesystem. Peter Hutterer's "Your input is important to us!" was a classic "here's where the cruft is and here's what we're doing about it" talk. Then came my Lguest Tutorial prep session and Part I.

After last year, when almost no one sailed smoothly through the preparation, I spent much more time preparing the images and kernel for everyone. That way you could either boot my kernel on your laptop (and live without some things working for the duration), or use kvm or even qemu to run my entire image.

Unfortunately I blew away two required files in a last-minute cleanup of the kernel tree (I pre-built it to save compile time, but it always links vmlinux so I deleted those files to save space). Getting those back inside the image was an exercise in pain: I had bzip2'd the image on the USB keys, otherwise I could have mounted them in place and fixed it myself.

So instead of scaring people off my tutorial with my sheer competence, I scared them off with incompetence. Colour me deeply, deeply annoyed.

Wednesday was the Penguin Dinner; traditionally it'd be Friday night, but it was Wed in Melbourne because of the night market and that seems to have stuck. I used to say that I disliked the auction; it goes on too long and very quickly 99% of people can't bid any more. And let's be honest: I'm just not that interesting that you want to listen to me for half the evening. But the emergence of consortia in recent years has changed this: it's not really an auction at all any more but a chance to get people to pledge Random Cool Things. Proof: the final consortium bid against itself several times. And we're actually big enough to make a difference to a useful cause. We still need half-time entertainment or something...

Thursday was Angela Beesley's keynote: again I felt that her content could have been more focussed for this crowd (assume everyone knows the basics and talk more about interesting facts and details). Also she was nervous and followed her script at the expense of showing passion (until questions).

My tutorial went well I think: more finely calibrated this year, in that everyone completed something, and at least two people completed the Advanced series of problems. I will have to add some more advanced tasks for 2010 (yes, I have to do it again: I'm still pissed off at my setup blunder). A few people repeated it from last year; is that good or bad?

I went to Jeff Arnold's ksplice talk; I like ksplice but I had some lingering questions. I ended by promising to review the code for him. I want this in my kernel, even if my distribution doesn't: we've done wackier things for less benefit.

I hit the end of Hugh's talk: seemed like quite a good "grab bag of tools and techniques" talk. As expected (having worked with Hugh of course) I had heard of most of them, but not all. One I will review on video where I can google while listening.

Friday came, and my first day with no presentation! Simon Phipps gave an excellent keynote. He showed himself to be part of our world and gave a nice high-level "here's how I (and to some extent, Sun) see things" without wandering into a product launch or equivalent. I know Richard Keech saw differently, but I don't think Simon misrepresented Red Hat (at least, assuming the audience were clued up: I can see how a more general audience could have gained a distorted impression).

Kimberlee Cox's HyKim robotic bear talk was saved by the cool content, but she's not a strong speaker and several audience questions made her seem out of her depth on the details (I didn't understand their points either, so I can't be sure on this one). But I do know that sometimes speakers switch modes from general into specific when asked a detailed question and you get an insight into how much they've been holding back so as not to confuse/bore/intimidate you. I didn't get that here.

I skipped most of Bdale's "Free As In Beard" lunchtime session (not my pun, but couldn't resist), but suffice to say I will neither be waxing my chest nor singing Queen songs next year. Honestly, no one wants that.

I was late to Adam Jackson's Shatter talk and then late to Rob Bradford's Clutter talk, so I wasted my time in both of them. My own fault, yet it annoys me every year when it happens.

In the morning I had volunteered to take care of the Lightning Talks, and then went and found Jeff Waugh to actually take care of them. He acked, and I didn't have to think about it again; of course, he did an awesome job. My contribution was to start (no one wants slot #1, it seems), and give a very quick and dirty plug for ccan and libantithread.

The Google Party, like the PDNS and Speaker's Dinner, was well done. Conrad Parker asked if anyone else had been to all 10 conferences; as far as I know, only he, Andrew Tridgell, Hugh Blemings and I have been to all of them since CALU. We should form some kind of Secret Society. And only Tridge has presented at every single one.

I also discussed with Dave Mandala an awesome project which would also make a great 2010 presentation if it comes together. 6am flight home on Saturday morning, and I am now mostly recovered.

[/tech] permanent link

Wed, 14 Jan 2009

libantithread 0.1

Finally, an antithread release!


(You can also download an Ogg Theora Video of each 1% improved frame).

Sometimes we use threads simply because using processes and shared memory is harder. But threads share far too much; libantithread is my solution.

It's in CCAN, and it's at the "useful demonstration" stage. It badly needs a nice load of documentation, but there are two example programs:

- A simple async DNS lookup engine. We fire off argc-1 antithreads to do the lookups.

- A more complex genetic algorithm example, illustrated above. Random blended triangles try to match a given image.

Simplest is to download the CCAN tarball of everything, do a "make" and then go into ccan/ccan/antithread/examples and "make" there.

[/tech] permanent link

Wed, 07 Jan 2009

Fun with cpumasks

I've been meaning for a while to write up what's happening with cpumasks in the kernel. Several people have asked, and it's not obvious so it's worth explaining in detail. Thanks to Oleg Nesterov for the latest reminder.

The Problems

The two obvious problems are

- Putting cpumasks on the stack limits us to NR_CPUS around 128, and

- The whack-a-mole attempts to fix the worst offenders are a losing game.

For better or worse, people want NR_CPUS 4096 in stock kernels today, and that number is only going to go up.

Unfortunately, our merge-surge development model makes whack-a-mole the obvious thing to do, but the results (creeping in largely unnoticed) have ranged from awkward to horrible. Here are some samples across that spectrum:

- cpumask_t instead of struct cpumask. I gather that this is a relic from when cpus were represented by an unsigned long, even though now it's always a struct.

- cpu_set/cpu_clear etc. are magic non-C creatures which modify their arguments through macro magic:

#define cpu_set(cpu, dst) __cpu_set((cpu), &(dst))

- cpumask_of_cpu(cpu) looked like this:

#define cpumask_of_cpu(cpu) \
(*({ \
	typeof(_unused_cpumask_arg_) m; \
	if (sizeof(m) == sizeof(unsigned long)) { \
		m.bits[0] = 1UL<<(cpu); \
	} else { \
		cpus_clear(m); \
		cpu_set((cpu), m); \
	} \
	&m; \
}))

Ignoring that this code has a silly optimization and could be better written, it's illegal, since it hands out the address of a local variable which is going out of scope. This is the code which got me looking at this mess to start with.

- New "_nr" iterators and operators have been introduced to only go up to nr_cpu_ids bits instead of all the way to NR_CPUS, and used where it seems necessary. (nr_cpu_ids is the actual cap of possible cpu numbers, calculated at boot.)

- Several macros contain implicit declarations in them, eg:

#define CPUMASK_ALLOC(m) struct m _m, *m = &_m
...
#define node_to_cpumask_ptr(v, node) \
	cpumask_t _##v = node_to_cpumask(node); \
	const cpumask_t *v = &_##v
#define node_to_cpumask_ptr_next(v, node) \
	_##v = node_to_cpumask(node)

But eternal vigilance is required to ensure that someone doesn't add another cpumask to the stack, somewhere, and that vigilance isn't going to happen.

The Goals

- No measurable overhead for small CONFIG_NR_CPUS.

- As little time and memory overhead as possible for large CONFIG_NR_CPUS kernels booted on machines with small nr_cpu_ids.

- APIs and conventions that reasonable hackers who don't care about giant machines can follow, without screwing up those machines.

The Solution

These days we avoid Big Bang changes where possible. So we need to introduce a parallel cpumask API and convert everything across, then get rid of the old one.

- The first step is to introduce replacements for the cpus_* functions. The new ones start with cpumask_; making names longer is always a little painful, but it's now consistent. The few operators which started with cpumask_ already were converted in one swoop (they were rarely used). These new functions take (const) struct cpumask pointers, and may only operate on some number of bits (CONFIG_NR_CPUS if it's small, otherwise nr_cpu_ids). This replacement is fairly simple, but you have to watch for cases like this:

for_each_cpu_mask(i, my_cpumask)
	...
if (i == NR_CPUS)

That final test should be "(i >= nr_cpu_ids)" to be safe now:

for_each_cpu(i, &my_cpumask)
	...
if (i >= nr_cpu_ids)

- The next step is to deprecate cpumask_t and NR_CPUS (CONFIG_NR_CPUS is now defined even for !SMP). These are minor annoyances but more importantly in a job this large and slow they mark where code needs to be inspected for conversion.

- cpumask_var_t is introduced to replace cpumask_t definitions (except in function parameters and returns, that's always (const) struct cpumask *). This is just struct cpumask[1] for most people, and alloc_cpumask_var/free_cpumask_var do nothing. Otherwise, it's a pointer to a cpumask when CONFIG_CPUMASK_OFFSTACK=y.

- alloc_cpumask_var currently allocates all CONFIG_NR_CPUS bits, and zeros any bits between nr_cpu_ids and NR_CPUS. This is because there are still some uses of the old cpu operators which could otherwise foul things up. It will be changed to do the minimal allocation as the transfer progresses.

- New functions are added to avoid common needs for temporary cpumasks. The two most useful are for_each_cpu_and() (to iterate over the intersection of two cpumasks) and cpumask_any_but() (to exclude one cpu from consideration).

- Another new function added for similar reasons was work_on_cpu(). There was a growing idiom of temporarily setting the current thread's cpus_allowed to execute on a specific CPU. This not only requires a temporary cpumask, it is potentially buggy since it races with userspace setting the cpumask on that thread.

- to_cpumask() is provided to convert raw bitmaps to struct cpumask *. In a few cases, cpumask_var_t cannot serve because we can't allocate early enough or there's no good place (or I was too scared to try), and I wanted to get rid of all 'struct cpumask' declarations, as we'll see in a moment.

- Architectures which have no intention of setting CONFIG_CPUMASK_OFFSTACK need do very little. We should convert them eventually, but there's no real benefit other than cleanup and consistency.

- I took the opportunity to centralize the cpu_online_map etc definitions, because we're obsoleting them anyway.

- cpu_online_mask/cpu_possible_mask etc (the pointer versions of the cpu_online_map/cpu_possible_map they replace) are const. This means that in the few cases where we really want to manipulate them, the new set_cpu_online()/set_cpu_possible() or init_cpu_online()/init_cpu_possible() should be used.

- We will change 'struct cpumask' to be undefined for CONFIG_CPUMASK_OFFSTACK=y. This can only be done once all cpumask_t (ie. struct cpumask) declarations are removed, including globals and from structures. Most of these are a good idea anyway, but some are gratuitous. But this will instantly catch any attempt to use struct cpumask on the stack, or to copy it (the latter is dangerous since cpumask_var_t will not allocate the entire mask).
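To see how the cpumask_var_t and nr_cpu_ids rules fit together, here's a userspace sketch. It only imitates the kernel: NR_CPUS = 4096, nr_cpu_ids = 8, CPUMASK_OFFSTACK and every my_-prefixed helper are hypothetical stand-ins, and the real alloc_cpumask_var() also takes gfp flags. Like the current kernel behaviour described above, this alloc_cpumask_var() allocates all NR_CPUS bits. The iterator stops at nr_cpu_ids, which is why "not found" has to be tested with >= nr_cpu_ids:

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>

#define NR_CPUS 4096		/* hypothetical compile-time maximum */
#define CPUMASK_OFFSTACK 1	/* stand-in for CONFIG_CPUMASK_OFFSTACK */
#define BITS_PER_LONG (CHAR_BIT * sizeof(unsigned long))
#define BITS_TO_LONGS(n) (((n) + BITS_PER_LONG - 1) / BITS_PER_LONG)

struct cpumask {
	unsigned long bits[BITS_TO_LONGS(NR_CPUS)];
};

static int nr_cpu_ids = 8;	/* pretend boot found 8 possible cpus */

#if CPUMASK_OFFSTACK
/* A pointer: the mask lives on the heap, never on the stack. */
typedef struct cpumask *cpumask_var_t;
static bool alloc_cpumask_var(cpumask_var_t *mask)
{
	*mask = malloc(sizeof(struct cpumask));
	return *mask != NULL;
}
static void free_cpumask_var(cpumask_var_t mask) { free(mask); }
#else
/* A one-element array: decays to a pointer, so callers look the same. */
typedef struct cpumask cpumask_var_t[1];
static bool alloc_cpumask_var(cpumask_var_t *mask) { (void)mask; return true; }
static void free_cpumask_var(cpumask_var_t mask) { (void)mask; }
#endif

static void my_cpumask_clear(struct cpumask *m)
{
	memset(m->bits, 0, sizeof(m->bits));
}

static void my_cpu_set(int cpu, struct cpumask *m)
{
	assert(cpu >= 0 && cpu < nr_cpu_ids);
	m->bits[cpu / BITS_PER_LONG] |= 1UL << (cpu % BITS_PER_LONG);
}

static bool my_cpu_isset(int cpu, const struct cpumask *m)
{
	return (m->bits[cpu / BITS_PER_LONG] >> (cpu % BITS_PER_LONG)) & 1;
}

/* Next cpu after 'cpu' set in both masks; returns nr_cpu_ids if none. */
static int my_next_and(int cpu, const struct cpumask *a,
		       const struct cpumask *b)
{
	int i;
	for (i = cpu + 1; i < nr_cpu_ids; i++)
		if (my_cpu_isset(i, a) && my_cpu_isset(i, b))
			break;
	return i;
}

/* Walk the intersection of a and b without building a temporary mask. */
#define my_for_each_cpu_and(cpu, a, b)					\
	for ((cpu) = my_next_and(-1, (a), (b)); (cpu) < nr_cpu_ids;	\
	     (cpu) = my_next_and((cpu), (a), (b)))

/* Any cpu in both masks except 'self'; >= nr_cpu_ids means none. */
static int pick_other_cpu(const struct cpumask *a, const struct cpumask *b,
			  int self)
{
	int cpu;
	my_for_each_cpu_and(cpu, a, b)
		if (cpu != self)
			return cpu;
	return cpu;	/* equals nr_cpu_ids here, never NR_CPUS */
}
```

Flipping CPUMASK_OFFSTACK to 0 turns the masks back into on-stack arrays without touching any caller, which is the property the conversion relies on.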

Conclusion

At this point, we will have code that doesn't suck, rules which can be enforced by the compiler, and the possibility of setting CONFIG_NR_CPUS to 16384 as the SGI guys really want.

Importantly, we are forced to audit all kinds of code. As always, a few places were buggy, but more were unnecessarily ugly. With less review happening these days before code goes in, it's important that we strive to leave code we touch neater than when we found it.

[/tech] permanent link