A recent post at http://jeffr-tech.livejournal.com/6268.html#cutid1 pointed to a possible scalability problem with MySQL on Linux when compared to FreeBSD.

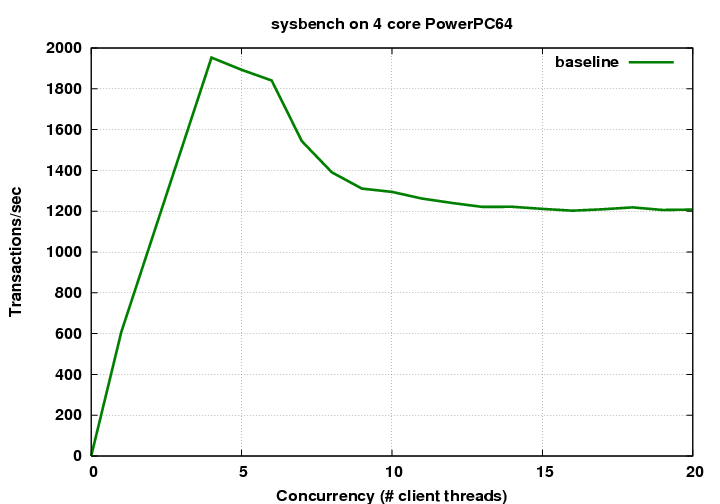

A baseline was taken to verify this setup sees the same scalability issue:

We see a problem when the number of benchmark threads exceeds the number of cores in the machine.

vmstat was used to get the overall state of the system:

```
# vmstat 1
procs -----------memory---------- ---swap-- -----io---- -system-- ----cpu----
 r  b   swpd    free   buff   cache   si   so    bi    bo    in     cs us sy id wa
 5  0      0  913296 107636 2784176    0    0     0    16  8625 131394 32  9 30  0
 4  0      0  913440 107640 2784180    0    0     0    16  7598 130074 32  9 30  0
 9  0      0  913268 107644 2784176    0    0     0    16  7952 131511 32  9 31  0
12  0      0  913120 107648 2784180    0    0     0    16  8254 134670 31  9 31  0
 5  0      0  912796 107652 2784180    0    0     0    16  8277 132390 31  9 32  0
 7  0      0  912772 107660 2784176    0    0     0    88  8316 133773 32  9 31  0
12  0      0  913148 107664 2784184    0    0     0    16  8620 132649 31  9 32  0
 2  0      0  913168 107668 2784180    0    0     0    16  8296 132613 32  9 31  0
 2  0      0  912772 107672 2784184    0    0     0    20  8521 131425 32  9 31  0
```

Notice the large amount of idle time (30-32%) and the very high context switch rate (over 130,000 per second).
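vmstat derives its cs column from the ctxt counter in /proc/stat, which counts context switches since boot; vmstat prints the change over each interval. A minimal sketch for sampling that counter directly, for example to watch the rate while a benchmark runs (the function names are hypothetical):

```c
#include <stdio.h>
#include <unistd.h>

/* Returns N from a "ctxt N" line of /proc/stat, or -1 for any other line. */
long long parse_ctxt_line(const char *line)
{
	long long n;

	return sscanf(line, "ctxt %lld", &n) == 1 ? n : -1;
}

/* Total context switches since boot, or -1 on failure. */
long long read_ctxt(void)
{
	char line[512];
	long long n = -1;
	FILE *f = fopen("/proc/stat", "r");

	if (f == NULL)
		return -1;
	/* Long lines such as "intr" arrive in several chunks; none of them
	   starts with "ctxt ", so they are skipped like any other line. */
	while (n < 0 && fgets(line, sizeof(line), f) != NULL)
		n = parse_ctxt_line(line);
	fclose(f);
	return n;
}

/* Context switches per second over one interval, like "vmstat N". */
long long ctxt_rate(unsigned int seconds)
{
	long long before = read_ctxt();
	long long after;

	if (before < 0 || seconds == 0)
		return -1;
	sleep(seconds);
	after = read_ctxt();
	return after < 0 ? -1 : (after - before) / (long long)seconds;
}
```

Calling ctxt_rate(1) while the benchmark runs should give figures comparable to the cs column above.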

Strace was used to trace one of the active mysqld threads:

```
# strace -p 12345
futex(0x10733a90, FUTEX_WAKE, 1) = 1
futex(0x10733a90, FUTEX_WAIT, 2, NULL) = 0
futex(0x10733a90, FUTEX_WAKE, 1) = 1
write(39, "\1\0\0\1\1*\0\0\2\3def\6sbtest\6sbtest\6sbte"..., 76) = 76
futex(0x10733a90, FUTEX_WAIT, 2, NULL) = -1 EAGAIN (Resource temporarily unavailable)
futex(0x10733a90, FUTEX_WAKE, 1) = 1
sched_setscheduler(20760, SCHED_OTHER, { 8 }) = -1 EINVAL (Invalid argument)
time([1173733352]) = 1173733352
read(39, "\n\0\0\0", 4) = 4
read(39, "\27\v\0\0\0\0\1\0\0\0", 10) = 10
time([1173733352]) = 1173733352
sched_setscheduler(20760, SCHED_OTHER, { 6 }) = -1 EINVAL (Invalid argument)
write(39, "\7\0\0\1\0\0\0\2\0\0\0", 11) = 11
sched_setscheduler(20760, SCHED_OTHER, { 8 }) = -1 EINVAL (Invalid argument)
time([1173733352]) = 1173733352
read(39, "\6\0\0\0", 4) = 4
read(39, "\3BEGIN", 6) = 6
time([1173733352]) = 1173733352
sched_setscheduler(20760, SCHED_OTHER, { 6 }) = -1 EINVAL (Invalid argument)
write(39, "\7\0\0\1\0\0\0\3\0\0\0", 11) = 11
sched_setscheduler(20760, SCHED_OTHER, { 8 }) = -1 EINVAL (Invalid argument)
time([1173733352]) = 1173733352
read(39, "\20\0\0\0", 4) = 4
read(39, "\27\1\0\0\0\0\1\0\0\0\0\0u\23\0\0", 16) = 16
time([1173733352]) = 1173733352
sched_setscheduler(20760, SCHED_OTHER, { 6 }) = -1 EINVAL (Invalid argument)
time([1173733352]) = 1173733352
time(NULL) = 1173733352
```

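Notice the repeated failing calls to sched_setscheduler. It looks like MySQL is trying to change thread priorities, but this doesn't have the intended effect on Linux: under SCHED_OTHER, Linux only accepts a sched_priority of 0, so every request for priority 6 or 8 fails with EINVAL and is simply a wasted system call. In particular, the following code in mysys/my_pthread.c in MySQL looks like it needs some attention: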

```c
#if (defined(__BSD__) || defined(_BSDI_VERSION)) && !defined(HAVE_mit_thread)
#define SCHED_POLICY SCHED_RR
#else
#define SCHED_POLICY SCHED_OTHER
#endif

uint thd_lib_detected= 0;

#ifndef my_pthread_setprio
void my_pthread_setprio(pthread_t thread_id,int prior)
{
#ifdef HAVE_PTHREAD_SETSCHEDPARAM
  struct sched_param tmp_sched_param;
  bzero((char*) &tmp_sched_param,sizeof(tmp_sched_param));
  tmp_sched_param.sched_priority=prior;
  VOID(pthread_setschedparam(thread_id,SCHED_POLICY,&tmp_sched_param));
#endif
}
#endif
```
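One way to address this in the source, rather than in the C library, is to skip the request whenever the priority falls outside the range the kernel accepts for the policy. The sketch below is hypothetical: my_pthread_setprio_checked and its explicit policy argument are not MySQL's API. It relies on sched_get_priority_min() and sched_get_priority_max(), which both return 0 for SCHED_OTHER on Linux.

```c
#include <pthread.h>
#include <sched.h>
#include <string.h>

/* True if the kernel would accept this priority for this policy. */
static int priority_in_range(int policy, int prior)
{
	int lo = sched_get_priority_min(policy);
	int hi = sched_get_priority_max(policy);

	return lo != -1 && hi != -1 && prior >= lo && prior <= hi;
}

/* Hypothetical variant of my_pthread_setprio(). Returns 0 if the request
   was skipped or applied, otherwise the error number returned by
   pthread_setschedparam(). */
int my_pthread_setprio_checked(pthread_t thread_id, int policy, int prior)
{
	struct sched_param param;

	if (!priority_in_range(policy, prior))
		return 0;	/* would only fail with EINVAL: skip the syscall */

	memset(&param, 0, sizeof(param));
	param.sched_priority = prior;
	return pthread_setschedparam(thread_id, policy, &param);
}
```

With a guard like this, the priority 6 and 8 requests seen in the strace output would never reach the kernel.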

As a quick fix, we can override sched_setscheduler via an LD_PRELOAD hack:

```
# cat override_sched_setscheduler.c
#include <sched.h>
#include <sys/types.h>

/* Pretend every sched_setscheduler() call succeeds without
   making the system call. */
int sched_setscheduler(pid_t pid, int policy, const struct sched_param *param)
{
	return 0;
}

# gcc -O2 -shared -fPIC -o override_sched_setscheduler.so override_sched_setscheduler.c
# LD_PRELOAD=./override_sched_setscheduler.so /usr/sbin/mysqld
```
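The stub above also swallows legitimate requests, such as a switch to SCHED_FIFO. A slightly more careful variant, sketched here under the assumption of glibc's dynamic linker, discards only the combination the kernel rejects anyway (SCHED_OTHER with a non-zero priority) and forwards everything else to the next sched_setscheduler definition, found with dlsym(RTLD_NEXT, ...):

```c
#define _GNU_SOURCE		/* for RTLD_NEXT */
#include <dlfcn.h>
#include <errno.h>
#include <sched.h>
#include <stddef.h>
#include <sys/types.h>

typedef int (*sched_setscheduler_fn)(pid_t, int, const struct sched_param *);

static sched_setscheduler_fn real_sched_setscheduler;

/* Look up the next definition (normally glibc's) once, at load time. */
__attribute__((constructor))
static void find_real_sched_setscheduler(void)
{
	real_sched_setscheduler =
		(sched_setscheduler_fn)dlsym(RTLD_NEXT, "sched_setscheduler");
}

int sched_setscheduler(pid_t pid, int policy, const struct sched_param *param)
{
	/* The kernel rejects a non-zero priority with SCHED_OTHER, so report
	   success without making the system call. */
	if (policy == SCHED_OTHER && param != NULL && param->sched_priority != 0)
		return 0;

	/* Forward every other request unchanged. */
	if (real_sched_setscheduler == NULL) {
		errno = ENOSYS;
		return -1;
	}
	return real_sched_setscheduler(pid, policy, param);
}
```

It is built and preloaded the same way as the stub; on glibc versions older than 2.34, -ldl has to be added to the gcc command line for dlsym().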

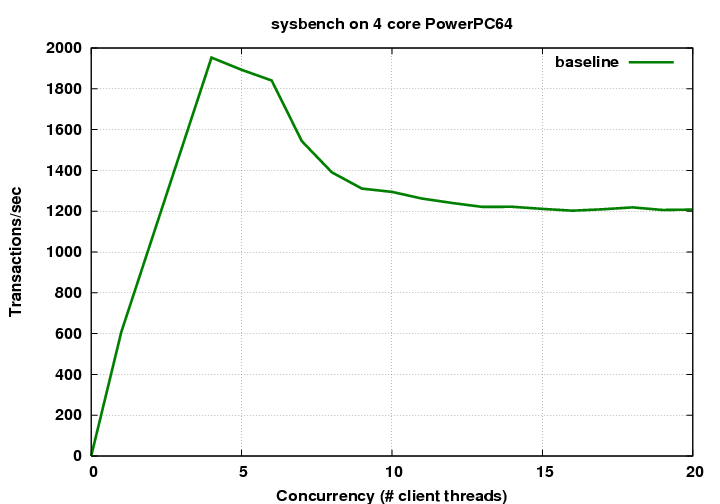

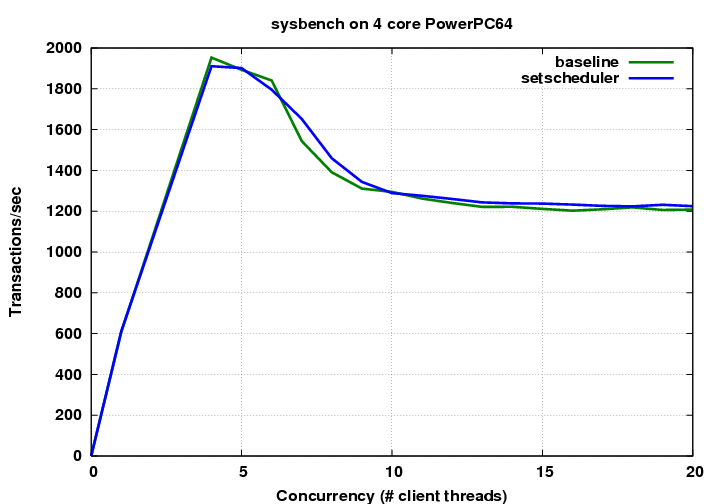

At this stage another run was taken:

While it looks to have helped slightly, the scalability issue remains.

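The high context switch rate and large amount of idle CPU suggested a scheduling problem. The many futex calls in the strace output further suggested contention on a pthread mutex or condition variable: an uncontended pthread mutex is locked and unlocked entirely in user space, so futex calls on one mean that threads are waiting for it.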
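The link between mutex contention and futex calls can be seen with a small program in which several threads hammer one global lock, run under `strace -f -e trace=futex`. A minimal sketch; run_contention is a hypothetical helper, not part of MySQL:

```c
#include <pthread.h>
#include <stdlib.h>

static pthread_mutex_t global_lock = PTHREAD_MUTEX_INITIALIZER;
static long shared_counter;		/* protected by global_lock */
static long iterations_per_thread;

static void *worker(void *arg)
{
	(void)arg;
	for (long i = 0; i < iterations_per_thread; i++) {
		/* Uncontended, lock and unlock stay in user space; once threads
		   collide, waiters sleep in FUTEX_WAIT and the unlocking thread
		   issues FUTEX_WAKE. */
		pthread_mutex_lock(&global_lock);
		shared_counter++;
		pthread_mutex_unlock(&global_lock);
	}
	return NULL;
}

/* Runs nthreads workers against the shared lock and returns the final
   counter, or -1 if no thread could be started. */
long run_contention(int nthreads, long iterations)
{
	pthread_t *tids = calloc((size_t)nthreads, sizeof(*tids));
	int started = 0;

	if (tids == NULL)
		return -1;
	shared_counter = 0;
	iterations_per_thread = iterations;
	while (started < nthreads &&
	       pthread_create(&tids[started], NULL, worker, NULL) == 0)
		started++;
	for (int i = 0; i < started; i++)
		pthread_join(tids[i], NULL);
	free(tids);
	return started > 0 ? shared_counter : -1;
}
```

Calling run_contention(8, 1000000) from a main() under strace -f should show FUTEX_WAIT and FUTEX_WAKE calls on the mutex once the workers start colliding.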

gdb was used to attach to mysqld while the benchmark was running. A backtrace of all threads was then taken:

```
# gdb /usr/sbin/mysqld 12345
(gdb) thread apply all backtrace
...
```

Many of the threads were blocking on a mutex in heap_open and heap_close:

```
#0  0x0ff573c8 in __lll_lock_wait () from /lib/tls/libpthread.so.0
#1  0x0ff51cdc in pthread_mutex_lock () from /lib/tls/libpthread.so.0
#2  0x1041c44c in heap_close ()
#3  0x10252d44 in ha_heap::close ()
#4  0x101c23a0 in free_tmp_table ()
#5  0x101cf5ac in JOIN::destroy ()
#6  0x102a0fc0 in st_select_lex::cleanup ()
#7  0x101e1d44 in mysql_select ()
#8  0x101e26c8 in handle_select ()
#9  0x1018bb6c in mysql_execute_command ()
#10 0x101ec414 in Prepared_statement::execute ()
#11 0x101ec8c4 in mysql_stmt_execute ()
#12 0x10192d5c in dispatch_command ()
#13 0x10193f94 in do_command ()
#14 0x101949c0 in handle_one_connection ()
#15 0x0ff50618 in start_thread () from /lib/tls/libpthread.so.0
```

It turns out that MySQL has a global mutex (THR_LOCK_heap) protecting the code in heap/*. Initial attempts to break up this mutex produced mixed results; however, it would be worth revisiting this issue in the future.

Looking at the gdb backtraces again, it was noticed that the code in heap/* was allocating and freeing memory while holding the THR_LOCK_heap mutex. In fact, glibc's free() was blocking on malloc's own internal lock while the thread held THR_LOCK_heap:

```
#0  0x0fc538a8 in __lll_lock_wait () from /lib/tls/libc.so.6
#1  0x0fbdb0a0 in free () from /lib/tls/libc.so.6
#2  0x1042b458 in my_no_flags_free ()
#3  0x102522c4 in ha_heap::create ()
#4  0x102531c0 in ha_heap::open ()
#5  0x1024db80 in handler::ha_open ()
#6  0x101c9ae0 in create_tmp_table ()
#7  0x101dc6b0 in JOIN::optimize ()
#8  0x101e1ccc in mysql_select ()
#9  0x101e26c8 in handle_select ()
#10 0x1018bb6c in mysql_execute_command ()
#11 0x101ec414 in Prepared_statement::execute ()
#12 0x101ec8c4 in mysql_stmt_execute ()
#13 0x10192d5c in dispatch_command ()
#14 0x10193f94 in do_command ()
#15 0x101949c0 in handle_one_connection ()
#16 0x0ff50618 in start_thread () from /lib/tls/libpthread.so.0
```

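The glibc malloc library is known to have problems scaling with many threads. Because these allocation calls are all made while holding THR_LOCK_heap, any time a thread spends waiting on malloc's internal lock is added to the time every other thread spends waiting for THR_LOCK_heap, so the negative effects are multiplied.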
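A general way to stop allocator contention from compounding a global lock is to keep allocation out of the critical section. A minimal sketch of that pattern, assuming a hypothetical registry guarded by a single global lock (registry_add and registry_remove are illustrative names; this is not how MySQL's heap code is structured, and it is not the route taken in this investigation):

```c
#include <pthread.h>
#include <stdlib.h>
#include <string.h>

struct entry {
	struct entry *next;
	char name[32];
};

static pthread_mutex_t registry_lock = PTHREAD_MUTEX_INITIALIZER;
static struct entry *registry_head;	/* protected by registry_lock */

int registry_add(const char *name)
{
	/* Allocate and fill the node before taking the lock, so a thread
	   stalled inside malloc() holds up nobody else. */
	struct entry *e = malloc(sizeof(*e));

	if (e == NULL)
		return -1;
	strncpy(e->name, name, sizeof(e->name) - 1);
	e->name[sizeof(e->name) - 1] = '\0';

	pthread_mutex_lock(&registry_lock);
	e->next = registry_head;
	registry_head = e;
	pthread_mutex_unlock(&registry_lock);
	return 0;
}

/* Unlinks the first entry called name; returns 1 if one was removed. */
int registry_remove(const char *name)
{
	struct entry **pp, *victim = NULL;

	pthread_mutex_lock(&registry_lock);
	for (pp = &registry_head; *pp != NULL; pp = &(*pp)->next) {
		if (strcmp((*pp)->name, name) == 0) {
			victim = *pp;
			*pp = victim->next;
			break;
		}
	}
	pthread_mutex_unlock(&registry_lock);

	free(victim);	/* after unlocking; free(NULL) is a no-op */
	return victim != NULL;
}
```

Only pointer updates happen under the lock; the allocator is entered with no lock held.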

Google have written an alternative malloc library, tcmalloc, that is known to perform better with threads. Debian has packaged it, so it is a simple matter of installing it and using LD_PRELOAD again:

```
# apt-get install libgoogle-perftools0
# LD_PRELOAD=/usr/lib/libtcmalloc.so:./override_sched_setscheduler.so /usr/sbin/mysqld
```
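To compare the two allocators outside of MySQL, a small multithreaded malloc()/free() loop can be timed with and without libtcmalloc.so preloaded. A minimal sketch; run_alloc_stress is a hypothetical helper, and the block sizes (16 to 255 bytes) are an arbitrary assumption rather than anything taken from MySQL:

```c
#define _POSIX_C_SOURCE 200809L	/* for clock_gettime() */
#include <pthread.h>
#include <stdlib.h>
#include <time.h>

struct stress_arg {
	long pairs;	/* malloc()/free() pairs to perform */
	long done;	/* pairs actually completed */
};

static void *alloc_worker(void *p)
{
	struct stress_arg *a = p;

	for (long i = 0; i < a->pairs; i++) {
		char *buf = malloc((size_t)(16 + i % 240));

		if (buf == NULL)
			break;
		*(volatile char *)buf = 1;	/* touch the block so the pair is kept */
		free(buf);
		a->done++;
	}
	return NULL;
}

/* Runs nthreads workers and returns the elapsed wall-clock time in
   seconds (or -1.0 on setup failure); *total receives the number of
   completed pairs. */
double run_alloc_stress(int nthreads, long pairs, long *total)
{
	struct stress_arg *args = calloc((size_t)nthreads, sizeof(*args));
	pthread_t *tids = calloc((size_t)nthreads, sizeof(*tids));
	struct timespec t0, t1;
	int started = 0;

	*total = 0;
	if (args == NULL || tids == NULL) {
		free(args);
		free(tids);
		return -1.0;
	}
	clock_gettime(CLOCK_MONOTONIC, &t0);
	for (int i = 0; i < nthreads; i++) {
		args[i].pairs = pairs;
		if (pthread_create(&tids[i], NULL, alloc_worker, &args[i]) != 0)
			break;
		started++;
	}
	for (int i = 0; i < started; i++) {
		pthread_join(tids[i], NULL);
		*total += args[i].done;
	}
	clock_gettime(CLOCK_MONOTONIC, &t1);
	free(args);
	free(tids);
	return (double)(t1.tv_sec - t0.tv_sec) + (t1.tv_nsec - t0.tv_nsec) / 1e9;
}
```

Calling it from a main() that prints the elapsed time, then running the binary once normally and once with LD_PRELOAD=/usr/lib/libtcmalloc.so, gives a rough comparison; the numbers will depend on the machine, thread count and library versions.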

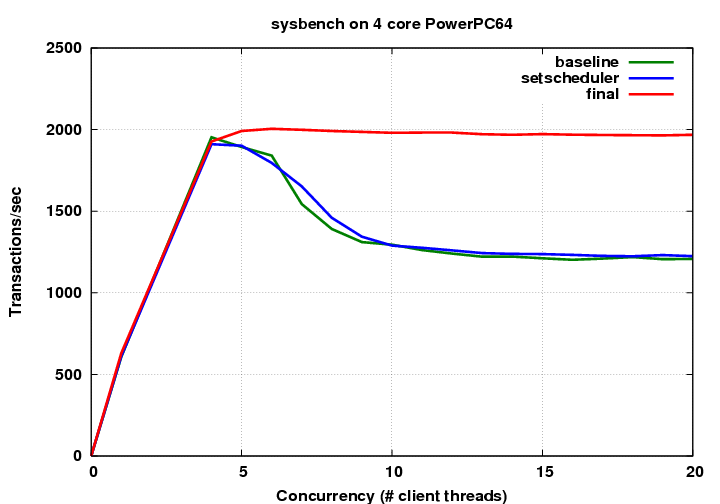

A run was done with both the setscheduler hack and the google malloc library:

Replacing the glibc malloc with Google's tcmalloc has fixed the scalability issue.